# News Summary: Google DeepMind Introduces AI Model That Runs Locally on Robots

* **News Title**: Google DeepMind Introduces AI Model That Runs Locally on Robots
* **Report Provider/Author**: PYMNTS.com / PYMNTS
* **Date/Time Period Covered**: Published on June 24, 2025. The article references related developments and reports from March, April, and February of the same year.
* **News Type**: Technology, Artificial Intelligence (AI), Robotics, Digital Transformation, Innovation.

---

## Main Findings and Conclusions

Google DeepMind has unveiled a new **vision language action (VLA) model** named **Gemini Robotics On-Device**, designed to operate directly on robotic devices without requiring an internet connection. This advancement signifies a step towards more robust and responsive robotic systems, particularly for applications where network connectivity is unreliable or latency is critical.

## Key Features and Capabilities

* **Local Operation**: The model runs entirely on the robotic device, eliminating the need for data network access. This is crucial for "latency sensitive applications and ensures robustness in environments with intermittent or zero connectivity," according to Carolina Parada, Google DeepMind's Senior Director and Head of Robotics.
* **General-Purpose Dexterity**: Gemini Robotics On-Device is engineered for broad manipulation capabilities and rapid adaptation to new tasks.
* **Bi-Arm Robot Focus**: The model is specifically designed for use with bi-arm robots, facilitating advanced dexterous manipulation.
* **Natural Language Understanding**: It can follow instructions given in natural language, enabling intuitive control.
* **Task Versatility**: The model demonstrates proficiency in a range of complex tasks, including:
    * Unzipping bags
    * Folding clothes
    * Zipping a lunchbox
    * Drawing a card
    * Pouring salad dressing
    * Assembling products
* **Fine-Tuning Capability**: This is Google DeepMind's first VLA model that is available for fine-tuning by developers. This allows for customization and improved performance for specific applications.
* **Rapid Task Adaptation**: The model can quickly adapt to new tasks with "as few as 50 to 100 demonstrations," showcasing its strong generalization capabilities from foundational knowledge.

## Context and Market Trends

* **Building on Previous Work**: Gemini Robotics On-Device builds upon the capabilities of Gemini Robotics, which was initially introduced in March.
* **Industry Shift**: The development aligns with a broader trend in Silicon Valley where large language models are being integrated into robots, enabling them to comprehend natural language commands and execute complex tasks.
* **Multimodality of Gemini**: Google's strategic decision to make Gemini multimodal (processing and generating text, images, and audio) is highlighted as a path toward enhanced reasoning capabilities, potentially leading to new consumer products.
* **Crowded Market**: The field of AI-powered robots capable of general tasks is becoming increasingly competitive, with several other companies also making significant advancements.

## Key Personnel Quotes

* **Carolina Parada (Senior Director and Head of Robotics, Google DeepMind)**:
    * "Since the model operates independent of a data network, it’s helpful for latency sensitive applications and ensures robustness in environments with intermittent or zero connectivity."
    * "While many tasks will work out of the box, developers can also choose to adapt the model to achieve better performance for their applications."
    * "Our model quickly adapts to new tasks, with as few as 50 to 100 demonstrations, indicating how well this on-device model can generalize its foundational knowledge to new tasks."

Google DeepMind introduced a vision language action (VLA) model that runs locally on robotic devices, without accessing a data network.

The new Gemini Robotics On-Device robotics foundation model features general-purpose dexterity and fast task adaptation, the company said in a Tuesday (June 24) blog post.

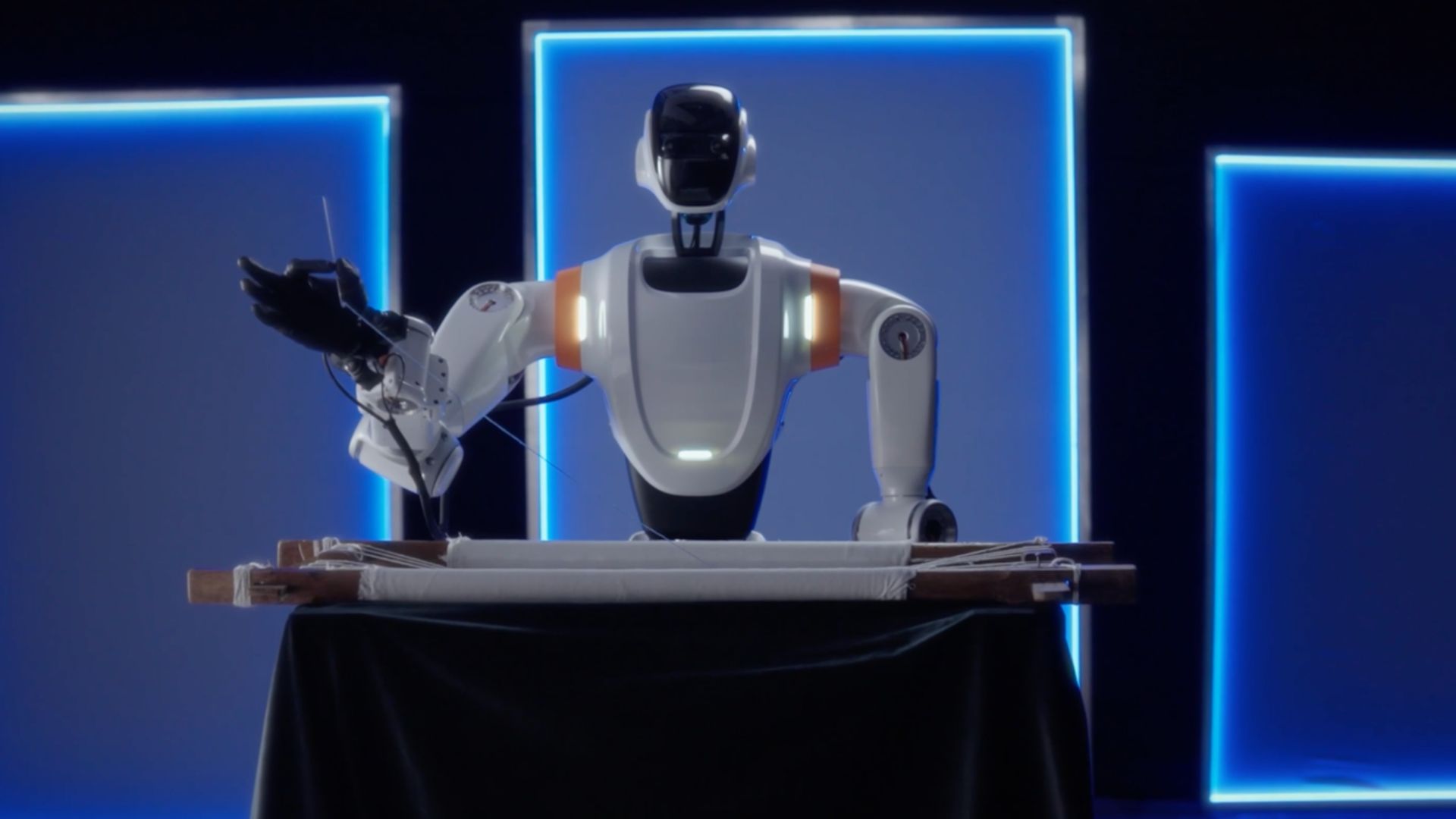

“Since the model operates independent of a data network, it’s helpful for latency sensitive applications and ensures robustness in environments with intermittent or zero connectivity,” Google DeepMind Senior Director and Head of Robotics Carolina Parada said in the post.

Building on the task generalization and dexterity capabilities of Gemini Robotics, which was introduced in March, Gemini Robotics On-Device is meant for bi-arm robots and is designed to enable rapid experimentation with dexterous manipulation and adaptability to new tasks through fine-tuning, according to the post.

The model follows natural language instructions and is dexterous enough to perform tasks like unzipping bags, folding clothes, zipping a lunchbox, drawing a card, pouring salad dressing and assembling products, per the post.

It is also Google DeepMind’s first VLA model that is available for fine-tuning, per the post.

“While many tasks will work out of the box, developers can also choose to adapt the model to achieve better performance for their applications,” Parada said in the post. “Our model quickly adapts to new tasks, with as few as 50 to 100 demonstrations, indicating how well this on-device model can generalize its foundational knowledge to new tasks.”

Google DeepMind’s Gemini Robotics is one of several companies’ efforts to develop humanoid robots that can do general tasks, PYMNTS reported in March.

Robotics is in fashion in Silicon Valley as large language models are giving robots the capability to understand natural language commands and do complex tasks.

The company’s advancements in Gemini Robotics show that the decision to make Gemini multimodal (taking and generating text, images and audio) is the path toward better reasoning. Gemini’s multimodality can spawn a whole new genre of consumer products for Google, PYMNTS reported in April.

Several other companies are also developing AI-powered robots demonstrating advancements in general tasks, making for a crowded market, PYMNTS reported in February.
