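Humanoid robots and embodied AI still face challenges in the real world. Despite flashy demos, the core technical bottleneck is missing "embodied knowledge," which makes it hard for robots to handle the complexity and uncertainty of the real world. The industry is exploring two routes, "demonstration" and "simulation," but commercial general-purpose humanoids are still some way off; applications in specific, well-defined settings are the viable direction for now.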

Why Humanoid Robots and Embodied AI Still Struggle in the Real World

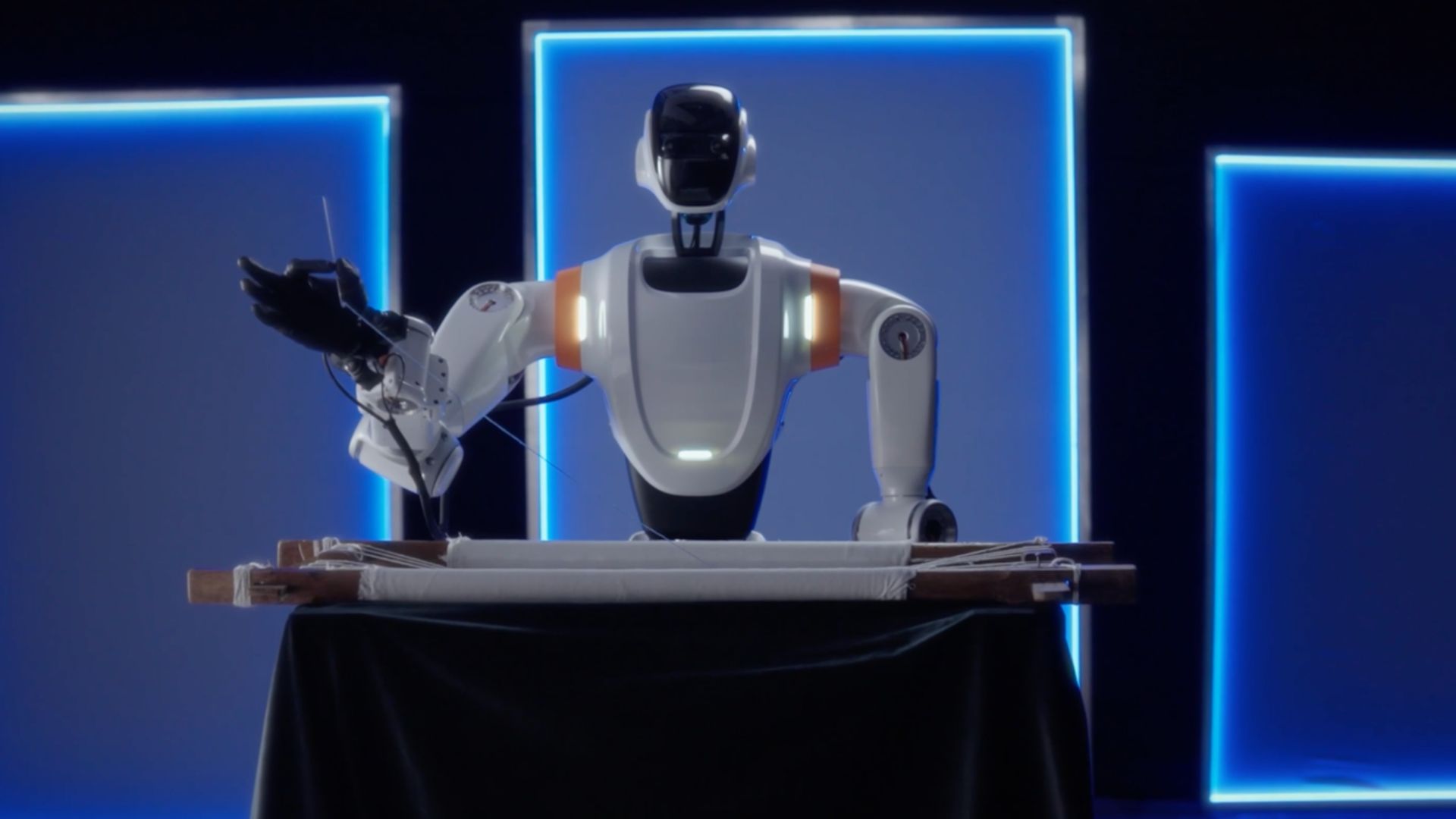

Read original at Scientific American · December 13, 2025 · 4 min read

General-purpose robots remain rare not for a lack of hardware but because we still can’t give machines the physical intuition humans learn through experience

BERLIN, GERMANY SEPTEMBER 6: The NEURA Robotics humanoid robot 4NE-1 Gen 3 is on display during IFA 2025 in Berlin, Germany, on September 6, 2025. Artur Widak/NurPhoto via Getty Images

In Westworld, humanoid robots pour drinks and ride horses. In Star Wars, “droids” are as ordinary as appliances. That’s the future I keep expecting when I watch the Internet’s new favorite genre: robots dancing, kickboxing or doing parkour. But then I look up from my phone, and there are no androids on the sidewalk.

By robots, I don’t mean the millions that are already deployed on factory floors or the tens of millions that consumers buy annually to vacuum rugs and mow lawns. I mean humanoid robots like C-3PO, Data and Dolores Abernathy: general-purpose humanoids.

What’s keeping them off the street is a challenge robotics researchers have circled for decades.

Building robots is easier than making them function in the real world. A robot can repeat a TikTok routine on a flat surface, but the world has uneven sidewalks, slippery stairs and people who rush by. To understand the difficulty, imagine crossing a messy bedroom in the dark while carrying a bowl of soup; every movement requires constant reevaluation and recalibration.

Artificial intelligence language models such as those that power ChatGPT don’t offer an easy solution.

They don’t have embodied knowledge. They’re like people who have read every book on sailing while always remaining on dry land: they can describe the wind and waves and quote famous mariners, but they don’t have a physical sense of how to steer the boat or handle the sail.

“Some people think we can get the data from videos of humans (for instance, from YouTube), but looking at pictures of humans doing things doesn’t tell you the actual detailed motions that the humans are performing, and going from 2D to 3D is generally very hard,” said roboticist Ken Goldberg in an August interview with the University of California, Berkeley’s news site.

To explain the gap, Meta’s chief AI scientist Yann LeCun has noted that, by age four, a child has taken in vastly more visual information through their eyes alone than the amount of data that the largest large language models (LLMs) are trained on. “In 4 years, a child has seen 50 times more data than the biggest LLMs,” he wrote on LinkedIn and X last year.

Children are learning from an ocean of embodied experience, and the massive datasets used to train AI systems are puddles by comparison. They’re also the wrong puddle: training an AI on millions of poems and blogs won’t make it any more capable of making your bed.

Roboticists have primarily focused on two approaches to closing this gap.

The first is demonstration. Humans teleoperate robotic arms, often through virtual reality, so systems can record what “good behavior” looks like. This has allowed a number of companies to begin building datasets for training future AIs.

The second approach is simulation. In virtual environments, AI systems can practice tasks thousands of times faster than humans can in the physical world.

But simulation runs into the reality gap. An easy task in a simulator can fail in reality because the real world contains countless tiny details: friction, squishy materials, lighting quirks.

That reality gap explains why a robot parkour star can’t wash your dishes. After the first World Humanoid Robot Games this year in Beijing, where robots competed in soccer and boxing, roboticist Benjie Holson wrote about his disappointment.

What people really want, he argued, is a robot that can do chores. He proposed a new Humanoid Olympics in which robots would face challenges such as folding an inside-out T-shirt, using a dog-poop bag and cleaning peanut butter off their own hand.

It’s easy to underestimate the complexity of those tasks.

Consider something as ordinary as reaching into a gym bag crammed with clothes to find one shirt. Every part of your hand and wrist detects textures, shapes and resistance. You can recognize objects by touch and proprioception without having to remove and inspect everything.

A useful parallel is a type of robot we’ve been teaching for years, usually without calling it a robot: the self-driving car.

For instance, Tesla collects data from its cars to train the next generation of its self-driving AI. Across the industry, companies have had to collect massive amounts of driving data to reach today’s levels of automation. But humanoids have a harder job than cars. Homes, outdoor spaces and construction sites are far more variable than highways.

This is why engineers design many current robots to function in clearly defined spaces—factories, warehouses, hospitals and sidewalks—and give them one job to do very well. Agility Robotics’ humanoid Digit carries warehouse totes. Figure AI’s robots work on assembly lines. UBTECH’s Walker S2 can lift and carry loads on production lines and autonomously swap out its battery.

And Unitree Robotics’ humanoid robots can walk and squat to pick up and move objects, but they’re still mostly used for research or demonstrations. Though these robots are useful, they’re still far from being a general-purpose household helper.

Among those working on robotics, there is broad disagreement about how quickly that gap will close.

In March 2025 Nvidia CEO Jensen Huang told journalists, “This is not five-years-away problem, this is a few-years-away problem.” In September 2025 roboticist Rodney Brooks wrote, “We are more than ten years away from the first profitable deployment of humanoid robots even with minimal dexterity.” He also warned of the dangers that robots pose because of a lack of coordination and a risk of falling.

“My advice to people is to not come closer than 3 meters to a full size walking robot,” Brooks wrote.

For now, what’s keeping Main Street from looking like a sci-fi set is that most humanoids are still in the kindergartens we’ve built for them: learning with teleoperators or in simulators. What we don’t know is how long their education will last.

When humanoid robots become commonplace, they’ll be more dynamic than today’s systems but far less flashy than the clips that go viral on TikTok. The future will still be machines doing the jobs for which they’ve been trained, day after day, without drama.