## Robots Programming Their Own Brains: A Leap Towards Autonomous Systems

**News Title:** Robots can program each other’s brains with AI: scientist

**Report Provider:** The Register

**Author:** Thomas Claburn

**Published Date:** August 7, 2025

This news report details a groundbreaking project by computer scientist Peter Burke, a professor at the University of California, Irvine. The project demonstrates that a robot can program its own brain using generative AI models and host hardware, with minimal human input. Burke describes this development as a "first step" towards the self-aware, world-dominating robots depicted in *The Terminator*.

### Key Findings and Conclusions:

* **AI-Generated Drone Control System:** Burke's research used generative AI models to create a complete, real-time, self-hosted drone command and control system (GCS), referred to as a "WebGCS." This system runs on a Raspberry Pi Zero 2 W card directly on the drone, making it accessible over the internet while airborne.
* **Efficiency Gains:** The AI-generated WebGCS took approximately **100 hours of human labor** over **2.5 weeks** to develop, resulting in **10,000 lines of code**. Burke estimates this is **20 times fewer hours** than were needed for Cloudstation, a comparable project he and a handful of students developed over four years.
* **AI Model and Tooling:** The project involved a series of "sprints" using various AI models (Claude, Gemini, ChatGPT) and AI integrated development environments (IDEs) such as VS Code, Cursor, and Windsurf.
* **Context Window Limitations:** A significant challenge was the limited context window of the AI models. When a conversation (a sequence of prompts and responses) exceeded the allowed token count, the model stopped being useful. Burke's experience is consistent with a study by S. Rando et al., which found that accuracy declines significantly as context length increases. He assumes that **one line of code is equivalent to 10 tokens**.
* **Future Implications:** Hantz Févry, CEO of Geolava, sees the work as a glimpse into the future of spatial intelligence and autonomous systems, where sensing, planning, and reasoning are fused in near real time. He also notes that aerial imagery is becoming "radically more accessible."

### Notable Risks and Concerns:

* **"The Terminator" Scenario:** Burke explicitly acknowledges the project's connection to *The Terminator* and expresses hope that the outcome depicted in the film "never occurs." The Register notes that this outcome is not necessarily a given amid growing military interest in AI.
* **Adversarial and Ambiguous Environments:** Févry points out that the real test for these systems will be their ability to handle adversarial or ambiguous environments rather than controlled simulations. Adapting to changes in terrain, mission goals, or system topology mid-flight remains a critical challenge.
* **Safety Boundaries:** Févry also stresses that there should be "hard checks and boundaries for safety" in such drone systems.

### Technical Details and Metrics:

* **Drone Hardware:** The drone was equipped with a **Raspberry Pi Zero 2 W**.
* **WebGCS Implementation:** The system runs a **Flask web server** on the Raspberry Pi.
* **Code Volume:** The final AI-generated WebGCS comprised **10,000 lines of code**.
* **Development Time:** The successful sprint took **2.5 weeks** with approximately **100 hours of human labor**.
* **AI Model Context:** Conversations with the AI models repeatedly exceeded the models' **token context windows**.

### Contextual Information:

* **Traditional GCS:** Typically, a drone's ground control system (GCS) runs on a ground-based computer and communicates with the drone over a wireless telemetry link. Examples include Mission Planner and QGroundControl.
* **Drone's "Brain":** Burke describes a drone's "brain" as a multi-layered system:
    * **Lower-level:** Drone firmware (e.g., ArduPilot).
    * **Intermediate:** The GCS, handling real-time mapping, mission planning, and drone configuration.
    * **Higher-level:** Systems like the Robot Operating System (ROS) for autonomous collision avoidance.
* **Human Oversight:** A redundant transmitter under human control was maintained during the project for manual override if necessary.

In conclusion, Peter Burke's research marks a significant advance in AI's ability to develop complex robot control systems with limited human input. While it offers considerable potential for efficiency and accessibility in areas like spatial intelligence, the project also raises ethical questions and technical challenges regarding safety and robustness in real-world, unpredictable environments.

Robots can program each other’s brains with AI: scientist

Computer scientist Peter Burke has demonstrated that a robot can program its own brain using generative AI models and host hardware, if properly prompted by handlers.

The project, he explains in a preprint paper, is a step toward The Terminator. "In Arnold Schwarzenegger’s Terminator, the robots become self-aware and take over the world," Burke's study begins.

"In this paper, we take a first step in that direction: A robot (AI code writing machine) creates, from scratch, with minimal human input, the brain of another robot, a drone."

Burke, a professor of electrical engineering and computer science at the University of California, Irvine, waits until the end of his paper to express his hope that "the outcome of Terminator never occurs."

While readers may assume as much, that's not necessarily a given amid growing military interest in AI. So there's some benefit to putting those words to screen.

The Register asked Burke whether he'd be willing to discuss the project but he declined, citing the terms of an embargo agreement while the paper, titled "Robot builds a robot’s brain: AI generated drone command and control station hosted in the sky," is under review by Science Robotics.

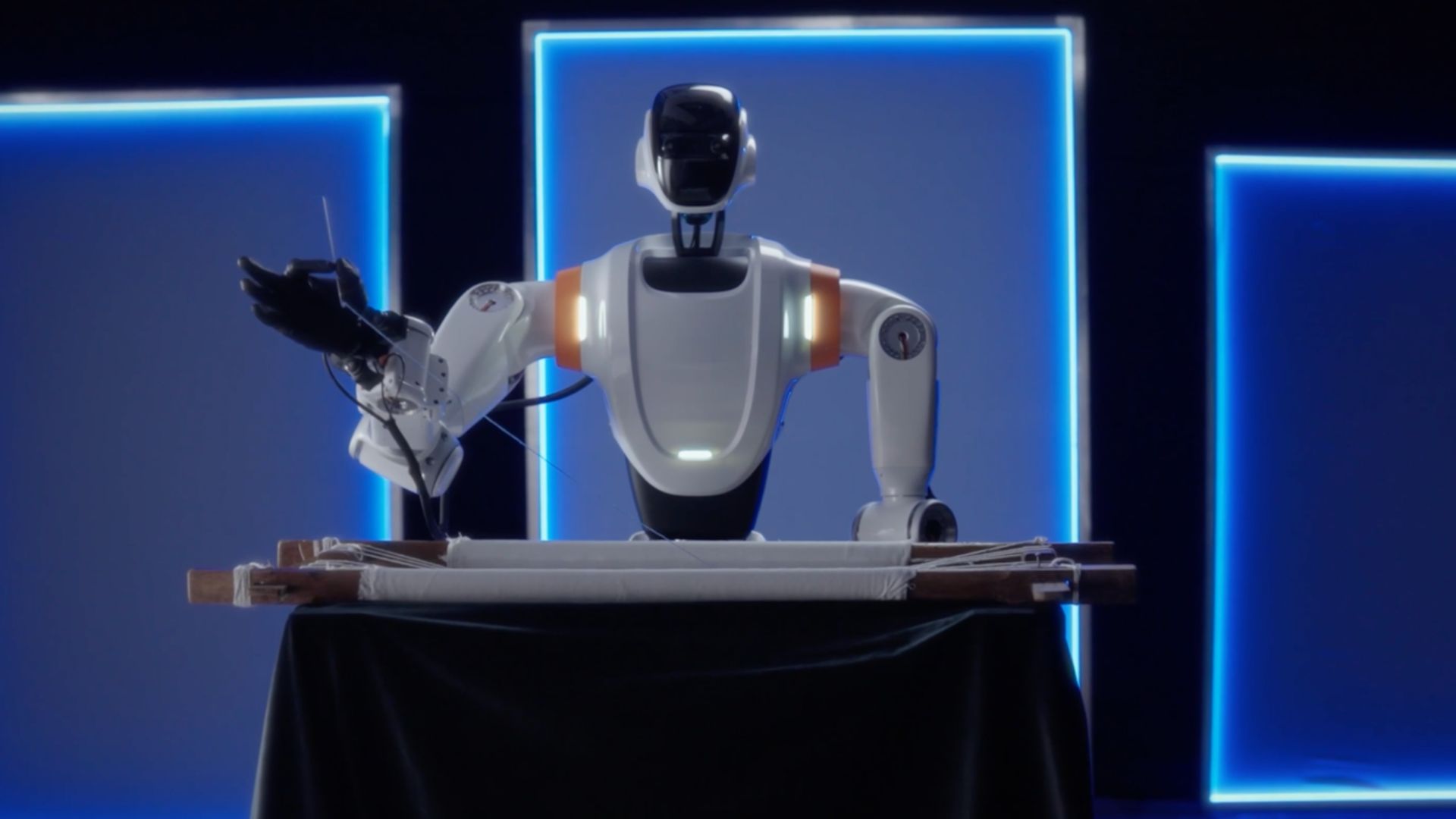

The paper uses two specific definitions for the word “robot”. One describes various generative AI models, running on a local laptop and in the cloud, that program the other robot: a drone equipped with a Raspberry Pi Zero 2 W, the server intended to run the control system code.

Usually, the control system, or ground control system (GCS), would run on a ground-based computer available to the drone operator, which would control the drone through a wireless telemetry link. Mission Planner and QGroundControl are examples of this sort of software.

The GCS, as Burke describes it, is an intermediate brain, handling real-time mapping, mission planning, and drone configuration. The lower-level brain would be the drone's firmware (e.g., ArduPilot), and the higher-level brain would be the Robot Operating System (ROS) or some other code that handles autonomous collision avoidance. A human pilot may also be involved.

What Burke has done is show that generative AI models can be prompted to write all the code required to create a real-time, self-hosted drone GCS, or rather a WebGCS, because the code runs a Flask web server on the Raspberry Pi Zero 2 W card on the drone. The drone thus hosts its own AI-authored control website, accessible over the internet, while in the air.
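The article does not show any WebGCS code. The minimal sketch below illustrates the idea of a vehicle serving its own control page, under explicit assumptions. It uses Python's standard-library `http.server` instead of Flask so it runs without extra packages. The `/status` and `/takeoff` routes and the in-memory state are hypothetical. Nothing is sent to a flight controller.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical in-memory vehicle state. A real GCS would fill this from MAVLink telemetry.
STATE = {"armed": False, "mode": "STABILIZE", "pending_command": None}
STATE_LOCK = threading.Lock()


class ControlHandler(BaseHTTPRequestHandler):
    """Serves a tiny JSON control API (hypothetical routes, not the paper's)."""

    def _send_json(self, code, payload):
        body = json.dumps(payload).encode("utf-8")
        self.send_response(code)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_GET(self):
        if self.path == "/status":
            with STATE_LOCK:
                snapshot = dict(STATE)
            self._send_json(200, snapshot)
        else:
            self._send_json(404, {"error": "not found"})

    def do_POST(self):
        if self.path == "/takeoff":
            with STATE_LOCK:
                # Only records the request; forwarding it to the autopilot is out of scope here.
                STATE["pending_command"] = "takeoff"
            self._send_json(202, {"accepted": "takeoff"})
        else:
            self._send_json(404, {"error": "not found"})

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


def serve(host="0.0.0.0", port=8080):
    """Start the control server on a background thread; port=0 lets the OS pick a free port."""
    server = ThreadingHTTPServer((host, port), ControlHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

Calling `serve()` with its defaults binds to every interface on port 8080, so the page is reachable from other machines on the network. That reachability is the property that makes an airborne, self-hosted GCS possible. A real implementation would replace `STATE` with live telemetry and turn `/takeoff` into an actual MAVLink command.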

The project involved a series of sprints with various AI models (Claude, Gemini, ChatGPT) and AI IDEs (VS Code, Cursor, Windsurf), each of which played some role in implementing an evolving set of capabilities.

The initial sprint, for example, focused on coding a ground-based GCS using Claude in the browser. It included the following prompts:

* Prompt: Write a Python program to send MAVLink commands to a flight controller on a Raspberry Pi. Tell the drone to take off and hover at 50 feet.
* Prompt: Create a website on the Pi with a button to click to cause the drone to take off and hover.
* Prompt: Now add some functionality to the webpage. Add a map with the drone location on it. Use the MAVLink GPS messages to place the drone on the map.
* Prompt: Now add the following functionality to the webpage: the user can click on the map, and the webpage will record the GPS coordinates of the map location where the user clicked. Then it will send a "guided mode" fly-to command over MAVLink to the drone.
* Prompt: Create a single .sh file to do the entire installation, including creating files and directory structures.

The sprint started off well, but after about a dozen prompts the model stopped working because the conversation (the series of prompts and responses) consumed more tokens than Claude's context window allowed.
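The takeoff and map-click prompts imply unit conversions that any such program must get right. This is an illustrative sketch, not Burke's code. It assumes MAVLink's `*_GLOBAL_INT` convention, in which latitude and longitude are integers in degrees times 10^7 and altitude is in meters. Its geofence radius and altitude ceiling are hypothetical limits of the "hard checks" kind Févry calls for later in the piece. No message is actually sent.

```python
import math
from dataclasses import dataclass

FEET_TO_METERS = 0.3048       # exact by definition
EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; adequate over short distances

# Hypothetical safety limits, for illustration only.
MAX_RADIUS_M = 500.0
MAX_ALT_M = 120.0


@dataclass(frozen=True)
class FlyToTarget:
    lat_int: int  # latitude in degrees * 1e7 (assumed MAVLink *_GLOBAL_INT convention)
    lon_int: int  # longitude in degrees * 1e7
    alt_m: float  # altitude in meters


def feet_to_meters(feet: float) -> float:
    return feet * FEET_TO_METERS


def distance_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters, using the haversine formula."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def click_to_target(click_lat, click_lon, alt_m, home_lat, home_lon) -> FlyToTarget:
    """Turn a map click into a fly-to target, rejecting anything outside the hard limits."""
    if not 0 < alt_m <= MAX_ALT_M:
        raise ValueError(f"altitude {alt_m} m outside (0, {MAX_ALT_M}] m")
    if distance_m(home_lat, home_lon, click_lat, click_lon) > MAX_RADIUS_M:
        raise ValueError("target outside geofence radius")
    return FlyToTarget(round(click_lat * 1e7), round(click_lon * 1e7), alt_m)
```

The 50-foot hover in the first prompt becomes `feet_to_meters(50)`, about 15.24 m. A click roughly 110 m from home passes the checks, while one more than a kilometer away raises `ValueError` before anything could reach the vehicle.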

Subsequent attempts with Gemini 2.5 and Cursor each ran into issues. The Gemini session was derailed by bash shell scripting errors. The Cursor session led to a functional prototype, but developers needed to refactor to break the project up into pieces small enough to accommodate model context limitations.

The fourth sprint using Windsurf finally succeeded.
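The Cursor workaround breaks the project into pieces small enough for the model's context. It can be approximated by greedily packing source files into token budgets. This is a hypothetical sketch, not the procedure Burke's team used. It estimates size with the 10-tokens-per-line assumption Burke applies in the paper, and the file names and budget in the example are made up.

```python
from typing import Dict, List

TOKENS_PER_LINE = 10  # Burke's stated rule of thumb: one line of code ~ 10 tokens


def estimate_tokens(source: str) -> int:
    """Rough token estimate from line count."""
    return len(source.splitlines()) * TOKENS_PER_LINE


def pack_into_chunks(files: Dict[str, str], budget_tokens: int) -> List[List[str]]:
    """Group files, in order, into chunks whose estimated size stays within budget_tokens."""
    chunks: List[List[str]] = []
    current: List[str] = []
    used = 0
    for name, source in files.items():
        cost = estimate_tokens(source)
        if cost > budget_tokens:
            raise ValueError(f"{name} alone exceeds the budget; split the file itself")
        if current and used + cost > budget_tokens:
            chunks.append(current)  # close the full chunk and start a new one
            current, used = [], 0
        current.append(name)
        used += cost
    if current:
        chunks.append(current)
    return chunks
```

With an 8,000-token budget, hypothetical files of 300, 500 and 400 lines pack as `[["a.py", "b.py"], ["c.py"]]`: the first two fill the budget exactly, so the third starts a new chunk. Each chunk could then be worked on in its own conversation.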

The AI-generated WebGCS took about 100 hours of human labor over the course of 2.5 weeks and resulted in 10K lines of code.

That's about 20 times fewer hours than Burke estimates were required to create a comparable project called Cloudstation, which Burke and a handful of students developed over the past four years.
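As back-of-the-envelope arithmetic, the 20x ratio puts Cloudstation at roughly 2,000 person-hours. The WebGCS effort averaged about 40 hours per week. Neither derived number is stated in the article.

$$
\begin{aligned}
100\ \text{h} \times 20 &\approx 2{,}000\ \text{h} \\
\frac{100\ \text{h}}{2.5\ \text{weeks}} &= 40\ \text{h/week}
\end{aligned}
$$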

One of the paper's observations is that current AI models can't handle much more than 10,000 lines of code. Burke cited a recent study (S. Rando et al.) which found that the accuracy of Claude 3.5 Sonnet on LongSWEBench declined from 29 percent to 3 percent as the context length increased from 32K to 256K tokens. He said his experience is consistent with Rando's findings, assuming that one line of code is the equivalent of 10 tokens.
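The 10-tokens-per-line assumption connects the 10,000-line ceiling to the study's context lengths. The worked conversion below treats 32K and 256K as 32,000 and 256,000 tokens:

$$
\begin{aligned}
10{,}000\ \text{lines} \times 10\ \tfrac{\text{tokens}}{\text{line}} &= 100{,}000\ \text{tokens} \\
\frac{32{,}000\ \text{tokens}}{10\ \text{tokens/line}} &= 3{,}200\ \text{lines} \\
\frac{256{,}000\ \text{tokens}}{10\ \text{tokens/line}} &= 25{,}600\ \text{lines}
\end{aligned}
$$

By this estimate, a 10,000-line codebase amounts to about 100K tokens. That lies between the two context lengths at which the study measured accuracy.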

Hantz Févry, CEO of spatial data biz Geolava, told The Register in an email that he found the drone project fascinating.

"The idea of a drone system autonomously scaffolding its own command and control center via generative AI is not only ambitious but also highly aligned with the direction in which frontier spatial intelligence is heading," he said.

"However, I strongly believe there should be hard checks and boundaries for safety."

The paper does note that a redundant transmitter under human control was maintained during the drone project in case manual override was required.

Based on his experience running Geolava, Févry said the emergence of these sorts of systems marks a shift in the business of aerial imagery.

"Aerial imagery is becoming radically more accessible," he said. "Autonomous capture is no longer a luxury but a foundation for spatial AI, whether from drones, stratospheric, or the LEO (low earth orbit) capture. Systems like the one described in the paper are a glimpse of what’s next, where sensing, planning, and reasoning are fused in near real-time. Even partially automated platforms like Skydio are already reshaping how environments are sensed and understood."

Févry said the real test for these systems will be how well generative AI can handle adversarial or ambiguous environments.

"It’s one thing to scaffold a control loop in simulation or with prior assumptions," he explained. "It’s another to adapt when the terrain, mission goals, or system topology changes mid-flight. But the long-term implications are significant: this kind of work foreshadows generalizable autonomy, not just task-specific robotics."

We leave you with the words of John Connor, from Terminator 3: Rise of the Machines: "The future has not been written. There is no fate but what we make for ourselves." ®